Earlier this week, I mentioned the exciting hackathon that produced a moderately-faithful reimagining of the world’s first Web browser. I was sufficiently excited about it that I not only blogged here but I also posted about it to MetaFilter. Of course, the very first thing that everybody there did was try to load MetaFilter in it, which… didn’t work.

People were quick to point this out and assume that it was something to do with the modernity of MetaFilter:

honestly, the disheartening thing is that many metafilter pages don’t seem to work. Oh, the modern web.

Some even went so far as to speculate that the reason related to MetaFilter’s use of CSS and JS:

CSS and JS. They do things. Important things.

This is, of course, complete baloney, and it’s easy to prove to oneself. Firstly, simply using the View Source tool in your browser on a MetaFilter page reveals source code that’s quite comprehensible, even human-readable, without going anywhere near any CSS or JavaScript.

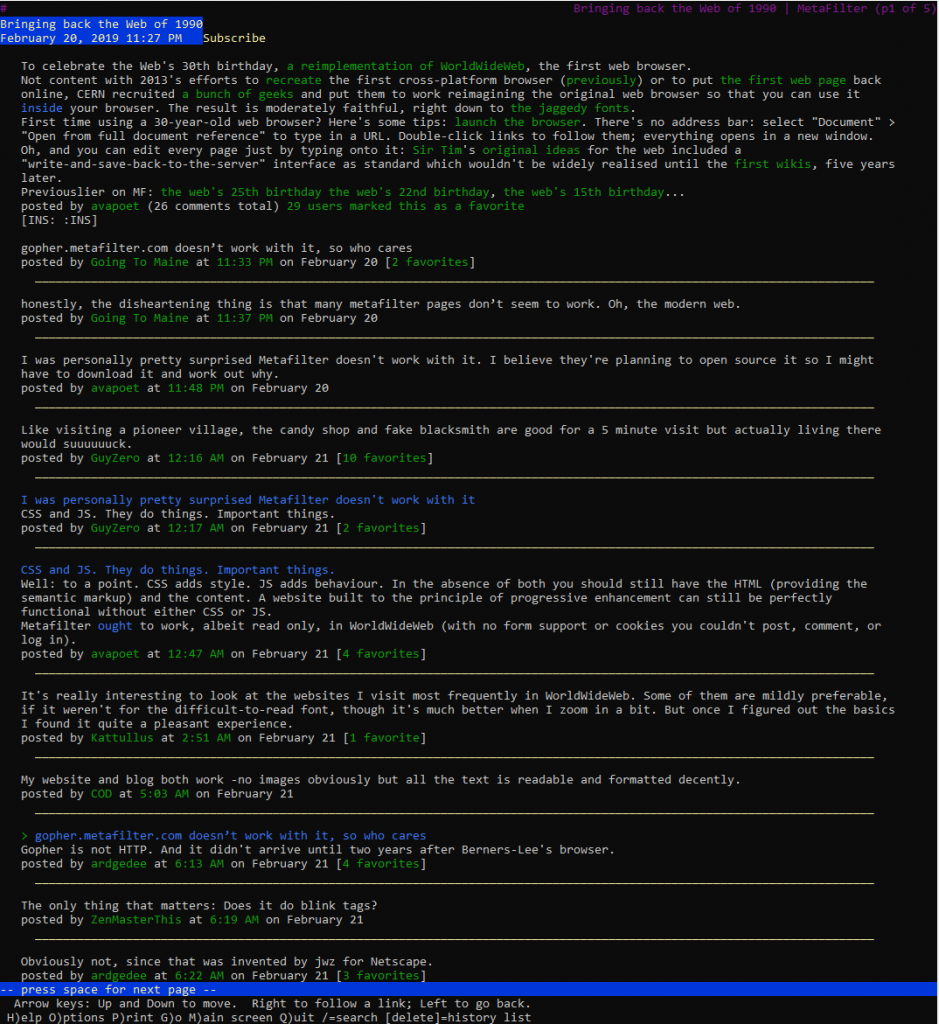

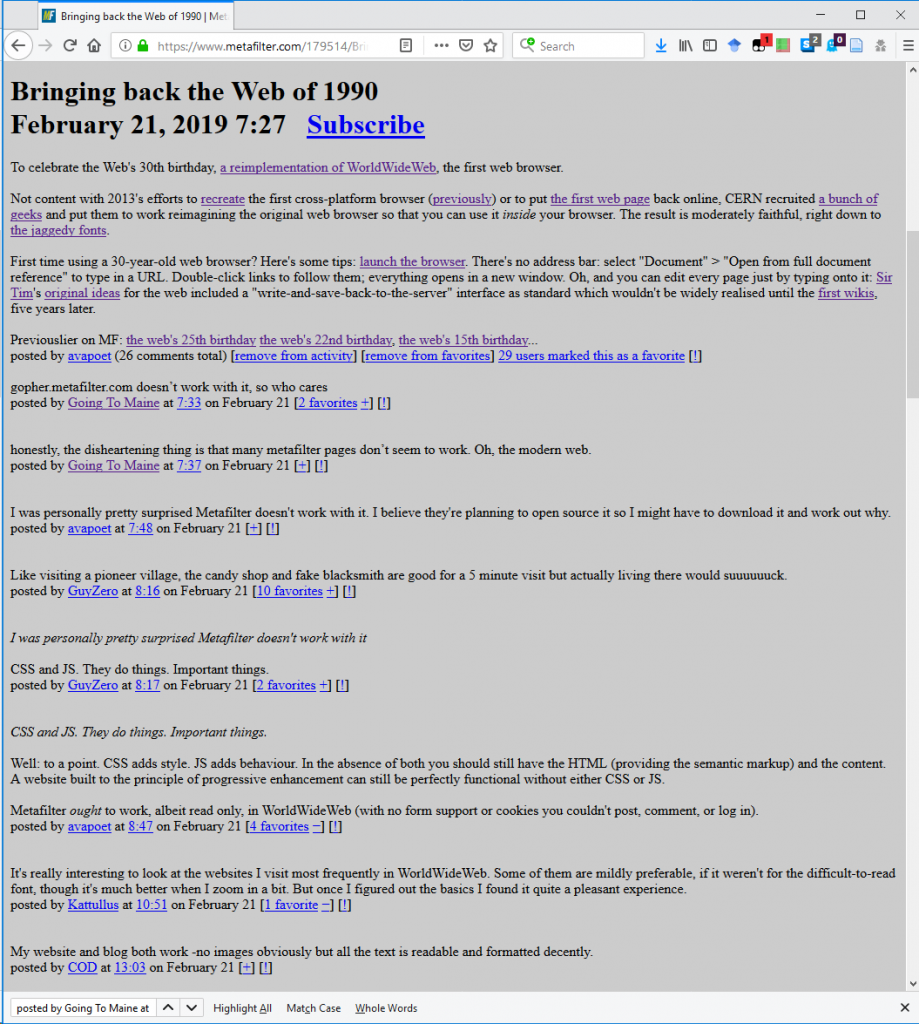

Secondly, it’s pretty simple to try browsing MetaFilter without CSS or JavaScript enabled! I tried in two ways: first, by using Lynx, a text-based browser that’s never supported either of those technologies. I also tried by using Firefox but with them disabled (honestly, I slightly miss when the Web used to look like this):

And thirdly: the error code being returned by the simulated WorldWideWeb browser is a HTTP code 500. Even if you don’t know your HTTP codes (I mean, what kind of weirdo would take the time to memorise them all anyway <ahem>), it’s worth learning this: the first digit of a HTTP response code tells you what happened:

- 1xx means “everything’s fine, keep going”;

- 2xx means “everything’s fine and we’re done”;

- 3xx means “try over there”;

- 4xx means “you did something wrong” (the infamous 404, for example, means you asked for a page that doesn’t exist);

- 5xx means “the server did something wrong”.

Simple! The fact that the error code begins with a 5 strongly implies that the problem isn’t in the (client-side) reimplementation of WorldWideWeb: if this had have been a CSS/JS problem, I’d expect to see a blank page, scrambled content, “filler” content, or incomplete content.

So I found myself wondering what the real problem was. This is, of course, where my geek flag becomes most-visible: what we’re talking about, let’s not forget, is a fringe problem in an incomplete simulation of an ancient computer program that nobody uses. Odds are incredibly good that nobody on Earth cares about this except, right now, for me.

Luckily, I spotted Jeremy’s note that the source code for the WorldWideWeb simulator was now available, so I downloaded a copy to take a look. Here’s what’s happening:

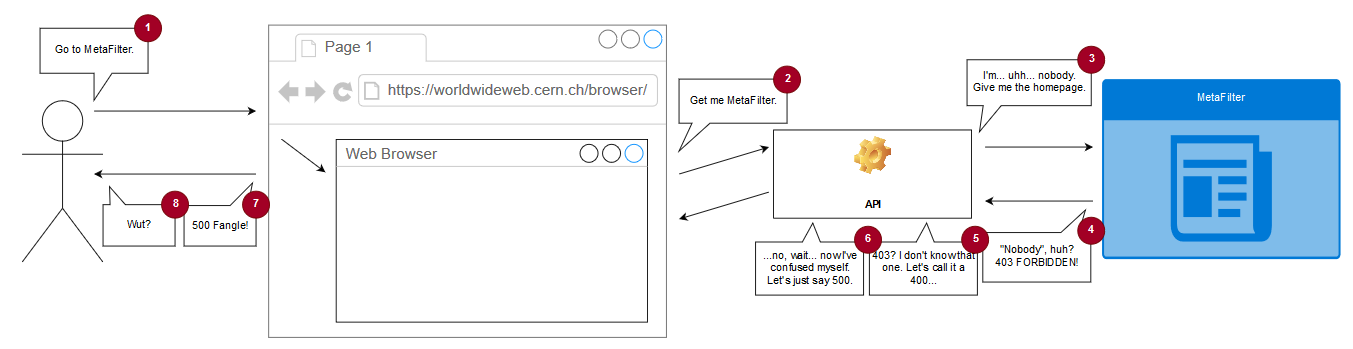

- The (simulated) copy of WorldWideWeb is asked to open a document by reference, e.g. “https://www.metafilter.com/”.

- To work around same-origin policy restrictions, the request is sent to an API which acts as a proxy server.

- The API makes a request using the Node package “request” with this line of code:

request(url, (error, response, body) => { ... }). When the first parameter to request is a (string) URL, the module uses its default settings for all of the other options, which means that it doesn’t set the User-Agent header (an optional part of a Web request where the computer making the request identifies the software that’s asking). - MetaFilter, for some reason, blocks requests whose User-Agent isn’t set. This is weird! And nonstandard: while web browsers should – in RFC2119 terms – set their User-Agent: header, web servers shouldn’t require that they do so. MetaFilter returns a 403 and a message to say “Forbidden”; usually a message you only see if you’re trying to access a resource that requires session authentication and you haven’t logged-in yet.

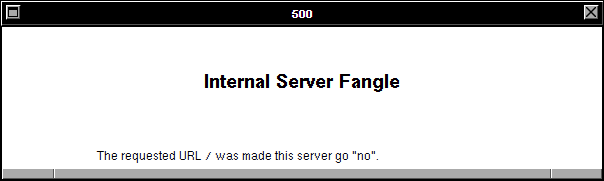

- The API is programmed to handle response codes 200 (okay!) and 404 (not found), but if it gets anything else back it’s supposed to throw a 400 (bad request). Except there’s a bug: when trying to throw a 400, it requires that an error message has been set by the request module and if there hasn’t… it instead throws a 500 with the message “Internal Server Fangle” and no clue what actually went wrong. So MetaFilter’s 403 gets translated by the proxy into a 400 which it fails to render because a 403 doesn’t actually produce an error message and so it gets translated again into the 500 that you eventually see. What a knock-on effect!

The fix is simple: simply change the line:

request(url, (error, response, body) => { ... })

to:

request({ url: url, headers: { 'User-Agent': 'WorldWideWeb' } }, (error, response, body) => { ... })

This then sets a User-Agent header and makes servers that require one, such as MetaFilter, respond appropriately. I don’t know whether WorldWideWeb originally set a User-Agent header (CERN’s source file archive seems to be missing the relevant C sources so I can’t check) but I suspect that it did, so this change actually improves the fidelity of the emulation as a bonus. A better fix would also add support for and appropriate handling of other HTTP response codes, but that’s a story for another day, I guess.

I know the hackathon’s over, but I wonder if they’re taking pull requests…