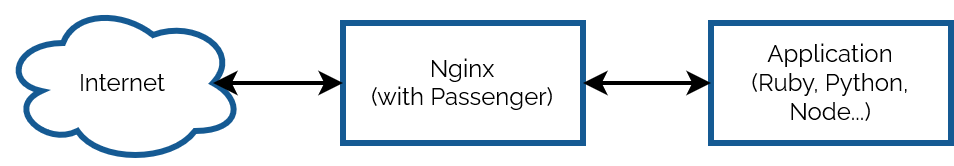

Suppose you’re running an application on a Passenger + Nginx powered server and you want to add caching.

Perhaps your application has a dynamic, public endpoint, but the contents don’t change super-frequently or it isn’t critically important that the user always gets up-to-the-second accuracy, and you’d like to improve performance with microcaching. How would you do that?

Where you’re at

Your configuration might look something like this:

```nginx
server {
  # listen, server_name, ssl, logging etc. directives go here
  # ...

  root /your/application;
  passenger_enabled on;
}
```

What you’re looking for is `proxy_cache` and its sister directives, but you can’t just insert them here. Passenger does act like an upstream proxy in some respects (e.g. `passenger_pass_header` mirrors the behaviour of `proxy_pass_header`), but it doesn’t provide any of the functionality you need to implement proxy caching of non-static files.

Where you need to be

Instead, what you need to do is define a second server, mount Passenger in that, and then proxy to that second server from the first. For example:

```nginx
# Set up a cache
proxy_cache_path /tmp/cache/my-app-cache keys_zone=MyAppCache:10m levels=1:2 inactive=600s max_size=100m;

# Define the actual webserver that listens for Internet traffic:
server {
  # listen, server_name, ssl, logging etc. directives go here
  # ...

  # You can configure different rules by location etc., but here's a simple microcache:
  location / {
    proxy_pass http://127.0.0.1:4863; # Proxy all traffic to the application server defined below
    proxy_cache MyAppCache;           # Use the cache defined above
    proxy_cache_valid 200 3s;         # Treat HTTP 200 responses as valid; cache them for 3 seconds
    proxy_cache_use_stale updating;   # (Optional) serve the outdated response while the cache is being updated
    proxy_cache_lock on;              # (Optional) only let one request at a time populate a new cache entry
  }
}

# (Local-only) application server on an arbitrary port number to act as the upstream proxy:
server {
  listen 127.0.0.1:4863;
  root /your/application;
  passenger_enabled on;
}
```

The two key changes are:

- Passenger moves to a second `server` block, localhost-only, on an arbitrary port number (it doesn’t need HTTPS, of course, but if your application detects or “expects” HTTPS you might need to tweak your headers).
- Your main `server` block proxies to the second as its upstream, and you can add whatever caching directives you like.
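One thing worth knowing about that header tweak: with this setup the application sees every request arriving from the caching server rather than from the client. By default nginx sends the `proxy_pass` address (here `127.0.0.1:4863`) as the `Host` header, and because the second server listens on plain HTTP, the application can no longer tell whether the original request used HTTPS. A minimal sketch of the extra directives for the main `server` block, assuming your framework trusts `X-Forwarded-Proto` and `X-Forwarded-For` from a local proxy (many Rack-based frameworks do, but check yours), might look like this:

```nginx
location / {
  proxy_pass http://127.0.0.1:4863;

  # Forward the client's original Host header; otherwise nginx sends
  # the proxied address (127.0.0.1:4863) instead.
  proxy_set_header Host $host;

  # Tell the application which scheme the client actually used (http or https).
  proxy_set_header X-Forwarded-Proto $scheme;

  # Pass along the client's IP address, which would otherwise appear as 127.0.0.1.
  proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

  # ...caching directives as before...
  proxy_cache MyAppCache;
  proxy_cache_valid 200 3s;
}
```

Relatedly, nginx’s default cache key is `$scheme$proxy_host$request_uri`, which uses the proxied address rather than the public host name. If several server names share one cache, you may also want `proxy_cache_key $scheme$host$request_uri;` so that their responses don’t collide.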

Obviously you’ll need to be smarter if you host a mixture of public and private content (e.g. send `Vary:` headers from your application) or if you want different cache durations on different addresses or types of content, but there are already great guides to help with that. I only wrote this post because I spent some time searching for (nonexistent!) `passenger_cache_*` directives and wanted to save the next person from the same trouble!
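As a rough starting point for both of those, nginx lets you set `proxy_cache_valid` per `location` and skip the cache based on a request variable. The sketch below assumes a hypothetical `/stats` endpoint that can tolerate 30 seconds of staleness and a hypothetical session cookie named `my_app_session` that marks logged-in (private) requests; adjust both to match your application.

```nginx
server {
  # listen, server_name, ssl, logging etc. directives go here
  # ...

  # Hypothetical endpoint that can safely be cached for longer
  location /stats {
    proxy_pass http://127.0.0.1:4863;
    proxy_cache MyAppCache;
    proxy_cache_valid 200 30s;
  }

  location / {
    proxy_pass http://127.0.0.1:4863;
    proxy_cache MyAppCache;
    proxy_cache_valid 200 3s;

    # If the (hypothetical) session cookie is present, neither serve
    # this request from the cache nor store its response.
    proxy_cache_bypass $cookie_my_app_session;
    proxy_no_cache $cookie_my_app_session;

    # Optional while tuning: report HIT, MISS, BYPASS etc. to the client.
    add_header X-Cache-Status $upstream_cache_status;
  }
}
```

Note that nginx already declines to cache responses carrying a `Set-Cookie` header or a `Cache-Control` header with `private`, `no-cache` or `no-store`, so sending those from your application is another way to keep private pages out of the cache.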
